I am deeply shocked that Science should have rejected publication of Dr. Richard Sproat's manuscript claiming that it "did not appear to provide sufficient general insight to be considered further for presentation to the broad readership of Science". I am posting the links here for readers to judge for themselves if the Science blokes were acting beyond their brief.

Are these guys really guardians of 'science'?

Such is the state of the so-called peer-reviewed journals that they do not seem to care to pursue the advancement of knowledge. It is unfortunate that editors shirk this basic responsibility of promoting discussions in an adventure of ideas which is the reason for 'publications'.

Richard's ideas should be deliberated upon in the context of reviews of Indus writing and many claims of 'decipherment'. One may or may not agree with Richard's classification of pre-literate 'writing' or 'symbol' systems but, in the interests of providing an even playing-field, all opinions related to early writing systems should be respectfully considered.

Here is the reference to Richard's monograph.

Kalyanaraman, May 26, 2013

From the American Association for the Advancement (?) of Science (?)

The following is a guest post by Richard Sproat:

Regular readers of Language Log will remember this piece discussing the various problems with a paper by Rajesh Rao and colleagues in their attempt to provide statistical evidence for the status of the Indus “script” as a writing system. They will also recall this piece on a similar paper by Rob Lee and colleagues, which attempted to demonstrate linguistic structure in Pictish inscriptions. And they may also remember this discussion of my “Last Words” paper in Computational Linguistics critiquing those two papers, as well as the reviewing practices of major science journals like Science.

In a nutshell: Rao and colleagues’ original paper in Science used conditional entropy to argue that the Indus “script” behaves more like a writing system than it does like a non-linguistic system. Lee and colleagues’ paper in Proceedings of the Royal Society used more sophisticated methods that included entropic measures to build a classification tree that apparently correctly classified a set of linguistic and non-linguistic corpora, and furthermore classified the Pictish symbols as logographic writing.
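For readers unfamiliar with the quantity, here is a minimal sketch of a bigram conditional entropy: how uncertain the next symbol is once the previous one is known. It is a plain plug-in (relative-frequency) estimate written for illustration, not the estimator used in either paper, and the function name and the representation of texts as symbol sequences are assumptions.

```python
from collections import Counter
from math import log2


def conditional_entropy(texts):
    """Plug-in estimate of H(next symbol | previous symbol), in bits.

    `texts` is an iterable of symbol sequences (strings or lists).
    Bigrams are counted within each text, never across text boundaries.
    """
    pair_counts = Counter()
    for text in texts:
        pair_counts.update(zip(text, text[1:]))

    total = sum(pair_counts.values())
    if total == 0:
        return 0.0  # no bigrams at all, so nothing to be uncertain about

    # Count how often each symbol occurs as the first member of a bigram.
    prev_counts = Counter()
    for (prev, _), count in pair_counts.items():
        prev_counts[prev] += count

    # H(Y|X) = -sum over (x, y) of p(x, y) * log2 p(y | x)
    return -sum(
        (count / total) * log2(count / prev_counts[prev])
        for (prev, _), count in pair_counts.items()
    )
```

A rigidly ordered sequence such as `"ABABAB"` gives a value of zero, and the value grows as the order becomes less constrained. A single number of this kind therefore says how constrained the symbol order is, not whether the system encodes language.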

But as discussed in the links given above, both of these papers were seriously problematic, which in turn called into question some of the reviewing standards of the journals involved.

Sometimes a seemingly dead horse has to be revived and beaten again, for those reviewing practices have yet again come into question. Or perhaps I should in this case say “non reviewing practices”: for an explanation, read on.

Not being satisfied by merely critiquing the previous work, I decided to do something constructive, and investigate more fully what one would find if one looked at a larger set of corpora of non-linguistic symbol systems, and contrasted them with a larger set of corpora of written language. Would the published methods of Rao et al. and Lee et al. hold up? Or would they fail as badly as I predicted they would? Are there any other methods that might be useful as evidence for a symbol system’s status? In order to answer those questions I needed to collect a reasonable set of corpora of non-linguistic systems, something that nobody had ever done. And for that I needed to be able to pay research assistants. So I applied for, and got, an NSF grant, and employed some undergraduate RAs to help me collect the corpora. A paper on the collection of some of the corpora was presented at the 2012 Linguistic Society of America meeting in Portland.

Then, using those corpora, and others I collected myself, I performed various statistical analyses, and wrote up the results (see below for links to a paper and a detailed description of the materials and methods). In brief summary: neither Rao’s nor Lee’s methods hold up as published, but a measure based on symbol repetition rate as well as a reestimated version of one of Lee et al.’s measures seem promising — except that if one believes those measures, then one would have to conclude that the Indus “script” and Pictish symbols are, in fact, not writing.

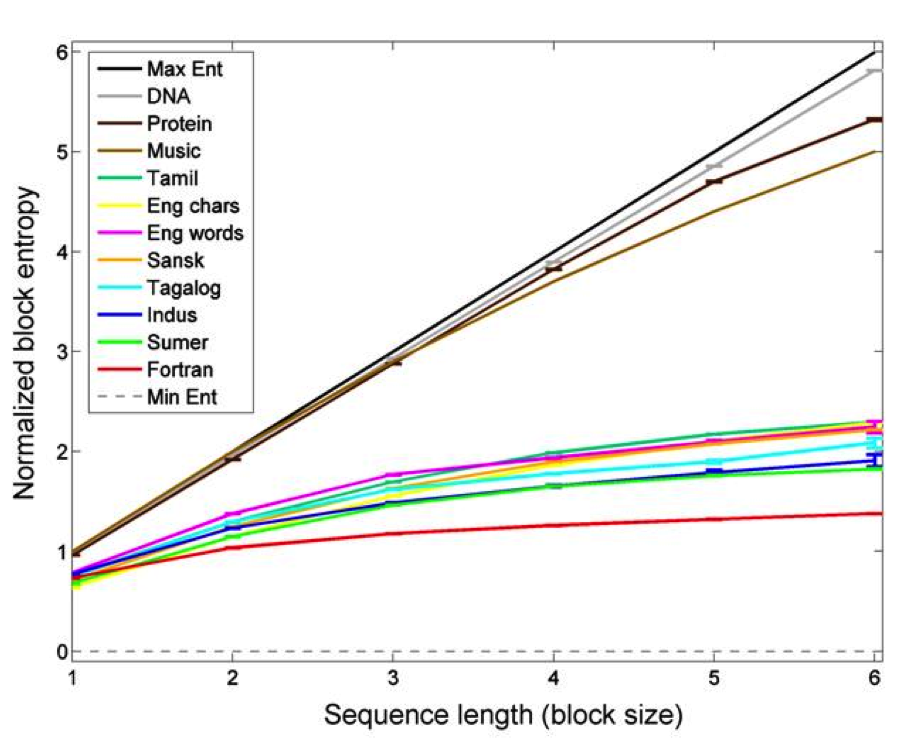

So for example in a paper published in IEEE Computer (Rao, R., 2010. Probabilistic analysis of an ancient undeciphered script. IEEE Computer 43(3), 76–80), Rao uses the entropy of n-grams (unigrams, bigrams, trigrams and so forth), which he terms “block entropy”, as a measure to show that the Indus “script” behaves more like language than it does like some non-linguistic systems. He gives the following plot:

For this particular analysis Rao describes exactly the method and software package he used to compute these results, so it is possible to replicate his method exactly for my own data.
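To make the quantity concrete, block entropy for block size n is simply the entropy of the distribution of n-grams in a corpus. The sketch below uses a naive relative-frequency estimate; it is not the software package Rao used, and such naive estimates are known to be biased downward on small corpora, so it should be read as an illustration of the definition only. The function name and the input format are assumptions.

```python
from collections import Counter
from math import log2


def block_entropy(texts, n):
    """Naive (relative-frequency) entropy of n-grams, in bits.

    `texts` is an iterable of symbol sequences (strings or lists);
    n-grams are taken within each text, never across text boundaries.
    """
    counts = Counter()
    for text in texts:
        for i in range(len(text) - n + 1):
            counts[tuple(text[i:i + n])] += 1

    total = sum(counts.values())
    if total == 0:
        return 0.0  # every text is shorter than n

    return -sum((c / total) * log2(c / total) for c in counts.values())


def block_entropy_curve(texts, max_n=6):
    """Block entropy for n = 1..max_n, i.e. one growth curve per corpus."""
    texts = list(texts)  # allow several passes over the corpus
    return [block_entropy(texts, n) for n in range(1, max_n + 1)]
```

Plotting `block_entropy_curve` for several corpora on the same axes gives growth curves of the general kind discussed here, although the exact values depend on the estimator and on any normalization applied.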

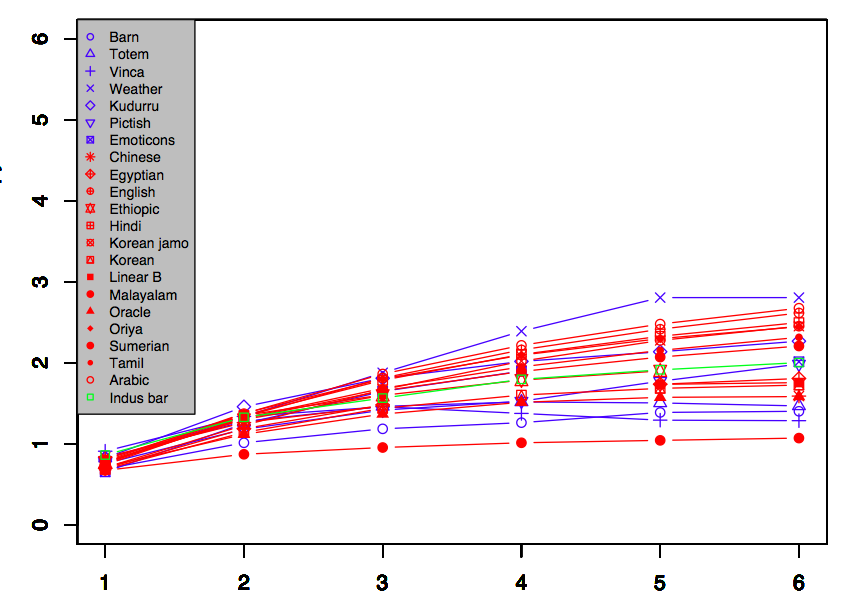

The results of that are shown below, where linguistic corpora are shown in red, non-linguistic in blue, and for comparison a small corpus of Indus bar seals in green. As can be seen, for a representative set of corpora, the whole middle region of the block entropy growth curves is densely populated with a mishmash of systems, with no particular obvious separation. The most one could say is that the Indus corpus behaves similarly to any of a bunch of different symbol systems, both linguistic and non-linguistic.

In this first version of the plot, I use the same vertical scale as Rao’s plot for ease of comparison:

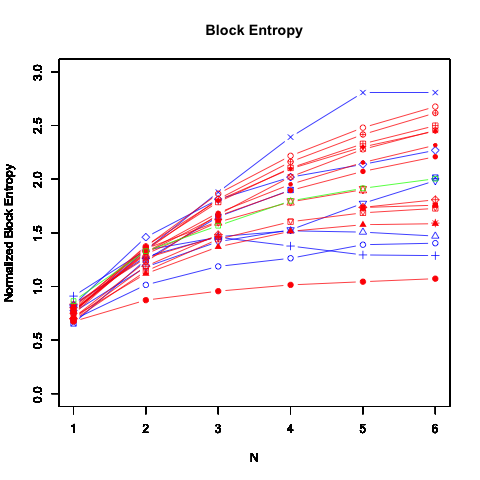

Here's a version with the vertical scale expanded, for easier comparison of the datasets surveyed — again, non-linguistic datasets are in blue, known writing systems are in red, and the Indus bar-seal data is in green:

With Lee et al.’s decision tree, using the parameters they published, I could replicate their result for Pictish (a logographic writing system), but it also classifies every single one of my other non-linguistic corpora, with the sole exception of Vinča, as linguistic.

One measure that does seem to be useful is the ratio of the number of symbols that repeat in a text and are adjacent to the symbol they repeat (r), to the number of total repetitions in the text (R). This measure is by far the cleanest separator of our data into linguistic versus nonlinguistic, with higher values for r/R (e.g. 0.85 for barn stars, 0.79 for weather icons, and 0.63 for totem poles), being nearly always associated with non-linguistic systems, and lower values (e.g. 0.048 for Ancient Chinese, 0.018 for Amharic or 0.0075 for Oriya) being associated with linguistic systems.
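The ratio r/R can be sketched in a few lines. The counting convention below is one reading of the description above and is an assumption: a token counts toward R if its symbol has already occurred earlier in the same text, and it additionally counts toward r if the immediately preceding token is the same symbol. Counts are pooled over all texts in a corpus before dividing. The function names are hypothetical.

```python
def repetition_counts(text):
    """Return (r, R) for one text, given as a sequence of symbols.

    R: tokens whose symbol already occurred earlier in this text.
    r: the subset of those tokens that directly follow the same symbol.
    (Assumed operationalization; the original may count differently.)
    """
    seen = set()
    adjacent_repeats = 0  # r
    total_repeats = 0     # R
    previous = None
    for symbol in text:
        if symbol in seen:
            total_repeats += 1
            if symbol == previous:
                adjacent_repeats += 1
        seen.add(symbol)
        previous = symbol
    return adjacent_repeats, total_repeats


def repetition_ratio(texts):
    """Pooled r/R over a corpus; None if no symbol ever repeats."""
    r = R = 0
    for text in texts:
        adjacent, total = repetition_counts(text)
        r += adjacent
        R += total
    return r / R if R else None
```

Under this convention `"AABA"` has two repetitions, one of them adjacent, so r/R is 0.5, while `"ABCABC"` has three repetitions and none adjacent, so r/R is 0.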

However, this separation is partially (though not fully) explained by the fact that r/R is correlated with text length, and non-linguistic texts tend to be shorter. This and other features (including Rao et al. and Lee et al.’s measures) were used to train classification and regression trees, and in a series of experiments I showed that if you hold out the Pictish or the Indus data from the training/development sets, the vast majority of trained trees classify them as non-linguistic.

To be sure, there are issues: we are dealing with small sample sizes, and one cannot whitewash the fact that statistics on small sample sizes are always suspect. But of course the same point applies even more strongly to the work of Rao et al. and Lee et al.

Where to publish such a thing? Naturally, since the original paper by Rao was published in Science, an obvious choice would be Science. So I developed the result into a paper, along with two supplements S1, S2, and submitted it.

I have to admit that when I submitted it I was not optimistic. I knew from what I had already seen that Science seems to like papers that purport to present some exciting new discovery, preferably one that uses advanced computational techniques (or at least techniques that will seem advanced to the lay reader). The message of Rao et al.’s paper was simple and (to those ignorant of the field) impressive looking. Science must have calculated that the paper would get wide press coverage, and they also calculated that it would be a good idea to pre-release Rao’s paper for the press before the official publication. Among other things, this allowed Rao and colleagues to initially describe their completely artificial and unrepresentative “Type A” and “Type B” data as “representative examples” of non-linguistic symbol systems, a description that they changed in the archival version to “controls” (after Steve Farmer, Michael Witzel and I called them on it in our response to their paper). But anyone reading the pre-release version, and not checking the supplementary material would have been conned into believing that “Type A” and “Type B” represented actual data. All of these machinations were very clearly calculated to draw in as many gullible reporters as possible. I knew, therefore, what I was up against.

So, given that backdrop, I wasn’t optimistic: my paper surely didn’t have such flash potential. But I admit that I was not prepared to receive this letter, less than 48 hours after submitting:

Dear Dr. Sproat:

Thank you for submitting your manuscript “Written Language vs. Non-Linguistic Symbol Systems: A Statistical Comparison” to Science. Our first stage of consideration is complete, and I regret to tell you that we have decided not to proceed to in-depth review.

Your manuscript was evaluated for breadth of interest and interdisciplinary significance by our in-house staff. Your work was compared to other manuscripts that we have received in the field of language. Although there were no concerns raised about the technical aspects of the study, the consensus view was that your results would be better received and appreciated by an audience of sophisticated specialists. Thus, the overall opinion, taking into account our limited space and distributional goals, was that your submission did not appear to provide sufficient general insight to be considered further for presentation to the broad readership of Science.

Sincerely yours,

Gilbert J. Chin

Senior Editor

It is worth noting that this is more or less exactly the same lame response as Farmer, Witzel and I received back in 2009 when we attempted to publish a letter to the editor of Science in response to Rao et al.’s paper:

Dear Dr. Sproat,

Thank you for your letter to Science commenting on the Report, titled "Entropic Evidence for Linguistic Structure in the Indus Script." I regret to say that we are not able to publish it. We receive many more letters than we can accommodate and so we must reject most of those contributed. Our decision is not necessarily a reflection of the quality of your letter but rather of our stringent space limitations.

We invite you to resubmit your comments as a Technical Comment. Please go to our New Submissions Web Site at www.submit2science.org and select to submit a new manuscript. Technical Comments can be up to 1000 words in length, excluding references and captions, and may carry up to 15 references. Once we receive your comments our editors will restart an evaluation for possible publication.

We appreciate your interest in Science.

Sincerely,

Jennifer Sills

Associate Letters Editor

We never did follow up on the invitation to submit a Technical Comment, but then we know very well what would have happened if we had.

So let’s get this straight: an article that deals with a 4500-year-old civilization that most people outside of South Asia have never heard of is of broad general interest if it purports to use some fancy computational method to make some point about that civilization. Forget the fact that the method is not fancy, is not even novel, and is in any case naively applied, and that the results are based on data that are at the very least highly misleading. But if a paper comes along that is based on much more solid data, tries a variety of different methods, shows unequivocally that the previously published methods do not work, and even reverses the conclusions of that previous work, then that is of no general interest.

I think the conclusion is inescapable that Science is interested primarily if not exclusively in how their publications will play out in the press. Presumably the press wouldn’t be that interested in the results of my paper: too technical, not good for sound bites. And certainly the conclusions might seem unpalatable: among other things it means that the earlier paper that Science did publish was wrong.

But then that’s what science is about, isn’t it? Science advances sometimes by showing that previous work was in error. Science is published by the American Association for the Advancement of Science, but it’s hard to see science, much less advancement, in their editorial policies.

More generally, why is it that in the past 5 years (at least), none of the relatively few papers that Science published in the area of language stands up to even mild scrutiny by linguists on forums such as Language Log and elsewhere? I have seen explanations to the effect that because they do not publish on language much, they don’t really have a good crop of reviewers for papers in that area. Certainly that does seem to have been true. The question is why it continues to be true: doesn’t an organization that claims to promote the advancement of science owe it to its readership to get qualified experts as peer reviewers for papers in an area that they cover, and to actually listen to the few qualified reviewers they do get (cf. here)? If they aren’t prepared or aren’t able to do this, then perhaps it would be better if they got out of the business of publishing papers related to language entirely.

But then again we know what their real goal is, don’t we? We know that whenever they review a paper it’s always with an eye to what Wired Magazine or The New York Times are going to do with that paper. So when they see a paper that uses some computational technique to derive a result that “increases the likelihood” that India’s oldest civilization was literate; or a paper that claims to show evidence that we can trace language evolution back to Africa 70K years ago (see here); they see the obvious opportunity for publicity. Science gets yet another showing in the popular press, and the lay reader will be duped by the dazzle of technical fireworks that he or she does not understand anyway, and whose details he or she can in any case be expected to quickly forget. All they might remember is that the paper looked “convincing” when reported, and of course that it was published in Science. It goes without saying that the opinions of the real experts can safely be ignored since those will only be vented in specialist journals or in informal forums like Language Log. Science will apparently never open its pages to the airing of expert views that go against their program.

And thus science (?) advances (?).

The above is a guest post by Richard Sproat.
